How to Tell if a Political Poll is Worth Reading

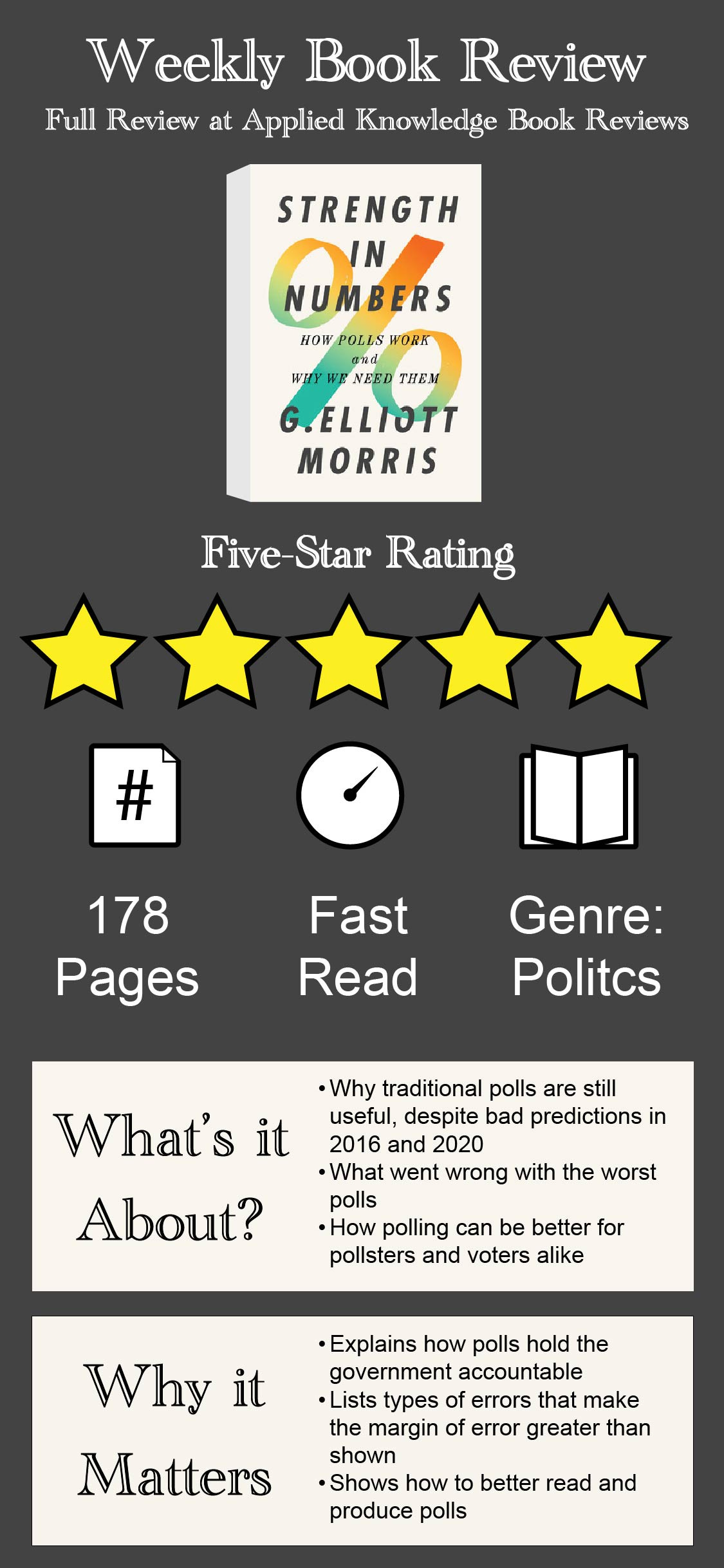

G. Elliott Morris' book shows you how the 2016 and 2020 presidential election polls went so wrong and how to evaluate political polls in the 2024 race.

Every major news site publishes polls, but how many readers can judge how those polls were conducted?

G. Elliott Morris’ book, Strength in Numbers, offers some answers that anyone can use.

Understanding what’s important to voters and who they’re voting for is a check on elected officials as much as it’s content for the rest of us.

It’s also important to understand how 2016 and 2020 polls went so wrong so those of us who read poll numbers online don’t get false hope or optimism.

However, the third one is the trickiest nugget to implement. Polls have to keep up with changes among voters. If voters consider new issues important and pollsters miss those changes, they’ll repeat the predictive disaster of 2016.

Why Election Polls Are More Useful Than We Think

Political polls don’t just make predictions about the next election. They also keep politicians on their toes.

Early in the book, Morris introduces John Dewey, a 19th- and 20th-century public opinion critic. Dewey believed that “government by experts” wasn’t enough for a functional democracy. Those experts needed to work on behalf of what the public wanted. Otherwise, the government would dissolve into an “oligarchy managed in the interests of the few.”

“Surely,” Morris writes, “the people, together, can point out the things that are wrong; a good government can handle the rest.”

Think about how polls are reported today. A recent NBC story didn’t just highlight key Biden poll numbers. The story went on to list the challenges he’d have to overcome as a result.

Whatever role social media or political ads play in changing voters’ minds, polls shape the way politicians run for office and determine their chances of staying there. At bottom, voters remain in charge, and polls are the best way to collect their preferences.

How 2016 Went so Wrong and How to Avoid Bad Polls

The upset of 2016 shattered many people’s faith in professional polling.

Shortly before 6:00 PM on election night, the New York Times forecasted Hillary Clinton’s chance of victory at over 80%. Before midnight, it had dropped to 5%, the model’s estimate of uncertainty.

Morris cites a Times investigation that found that “nearly half of the respondents in a typical national poll had at least a bachelor’s degree. But the percentage of college graduates among the actual population is only 28%.”

Voters with college degrees tend to vote Democratic and have been trending in that direction for decades. With so many more likely Democratic voters represented, it’s no wonder that Clinton seemed to have such a commanding lead until election night.

Pollsters weren’t stupid for not controlling for education. Andrew Smith, the director of the University of New Hampshire poll, hadn’t used it in the past and still produced accurate polls. He wrote to Morris that “when we include a weight for level of education, our predictions match the final number.”
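To see why an education weight matters, here is a minimal sketch of the arithmetic. It uses the two shares quoted above (about half of respondents with a degree versus 28% of the population). The support numbers for each group are hypothetical, made up purely for illustration, and real pollsters weight on many more variables than this.

```python
# Share of each education group in the raw sample vs. the actual population.
# 0.50 approximates the "nearly half" figure quoted above; 0.28 is the quoted population share.
sample_share = {"college": 0.50, "no_college": 0.50}
population_share = {"college": 0.28, "no_college": 0.72}

# HYPOTHETICAL support for one candidate within each group (not from the book).
support = {"college": 0.58, "no_college": 0.44}

# Each group's weight scales its share of the sample to its share of the population.
weights = {g: population_share[g] / sample_share[g] for g in sample_share}

# Topline with every respondent counted equally.
unweighted = sum(sample_share[g] * support[g] for g in sample_share)

# Topline after applying the education weights.
weighted = sum(sample_share[g] * weights[g] * support[g] for g in sample_share)

print(f"Unweighted support: {unweighted:.1%}")
print(f"Weighted support:   {weighted:.1%}")
```

With these made-up inputs, the candidate who does better among college graduates loses about three points once the sample is weighted to match the population. That is the kind of gap an education weight is meant to close.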

Even though Biden won the 2020 election, 2020 polls overrepresented Democrats again. Morris notes that Nate Silver had Biden winning Wisconsin by eight points when he won the state by only 0.6.

The issue with national polls for the second presidential election in a row? “They are not reaching Republican voters,” Morris writes.

How to Judge How Wrong an Election Poll May Be

Anyone who reads a poll can ask two questions:

Who did this poll survey?

How much error has this poll disclosed?

Understanding who the poll surveyed may require digging. Survey methodologies may be in the article reporting the poll or at a separate link on the pollster’s site.

Checking whether a poll’s methodology breaks the audience down by education is a good start. Education level divided voters in 2016 and 2020, and it will do so again in 2024.

Readers can also check how polls are conducted. Democrats were more likely to answer phone and internet surveys in the last two presidential election cycles. The different response rates stem partly from a new voter issue: social trust.

Morris quotes progressive pollster David Shor’s findings that social trust “used to be relatively uncorrelated with partisanship, and then in 2016 it was correlated with partisanship.”

If You Do Nothing Else, Double the Poll’s Margin of Error

Morris has an easy suggestion for anyone reading polls: double the margin of error.

He reminds readers that the margin of error that most polls report is only one type of error: sampling error, which comes from a sample that may be too small and exclude key respondents. However, Morris lists other sources of error, including:

Nonresponse error - people don’t fill the survey out

Measurement error - question wording skews people’s answers

Coverage error - pollsters missed a group in their survey

Methodological error - technical issues like how pollsters weight answers or code voters

After accounting for these additional sources of error, Morris found “that the true margin of error for a poll is at least twice as large as the one for sampling error alone.”
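For readers who want to try the rule of thumb, here is a rough sketch. It assumes the standard textbook formula for sampling error at 95% confidence on a simple random sample, which is not necessarily how any given pollster calculates its reported figure. The sample size and the candidate's share are hypothetical.

```python
import math

def sampling_margin_of_error(n, p=0.5, z=1.96):
    """Textbook sampling margin of error for a proportion p with n respondents.

    z=1.96 corresponds to 95% confidence; p=0.5 gives the widest margin.
    """
    return z * math.sqrt(p * (1 - p) / n)

def doubled_margin_of_error(n, p=0.5, z=1.96):
    """Morris' rule of thumb: treat the real margin as twice the sampling margin."""
    return 2 * sampling_margin_of_error(n, p, z)

n = 1000               # hypothetical number of respondents
candidate_share = 0.48  # hypothetical poll result

reported = sampling_margin_of_error(n)
doubled = doubled_margin_of_error(n)

print(f"Reported margin: +/- {reported:.1%}")
print(f"Doubled margin:  +/- {doubled:.1%}")
print(f"Plausible range: {candidate_share - doubled:.1%} to {candidate_share + doubled:.1%}")
```

A poll of 1,000 people carries a sampling margin of roughly 3.1 points. Doubling it turns a candidate at 48% into a range of about 42% to 54%, which is a useful reminder of how little a narrow lead can mean.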

Pollsters can’t predict everything that may throw a poll off ahead of time. Instead, we can follow Morris’ suggestion to become better consumers of polls and read them with greater skepticism.

Especially the polls that fill us with relief.